SUMMARY

This is part 1 of the summary of a 2-day workshop designed for interaction designers who want to explore the potential of low-code applications for creating agentic Large Language Models (LLMs), from design to deployment. It gives a roundup of the hows and whys of agentic AI in digital products, with as little coding as possible. Through a mix of theory and hands-on sessions, my students learned how to prototype, implement, and deploy agentic LLMs, with the following goals:

• Gain a clear understanding of current LLM applications and their practical use cases beyond image and text generation.

• Develop a product roadmap for an example project, adaptable to their own ideas.

• Build and deploy a prototype.

Definitions

What is the difference between an AI Workflow and an Agent?

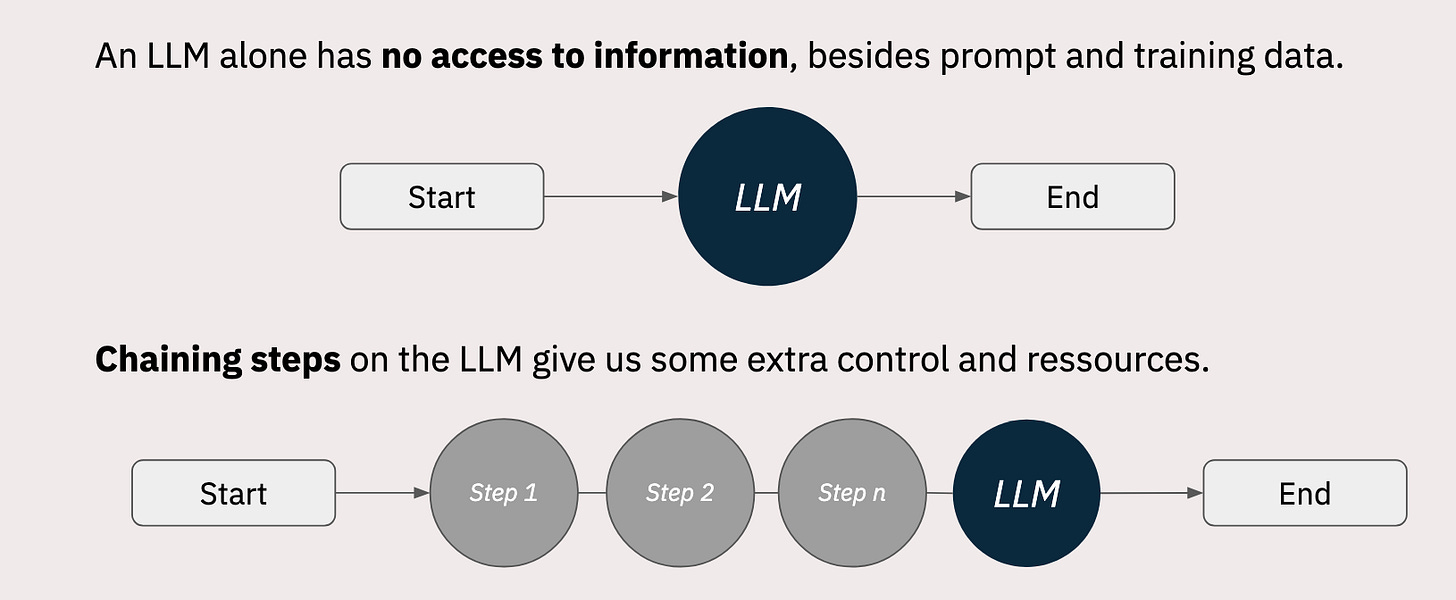

And why do we even need either of the two? While numerical AI workflows have been in use for decades, automating repetitive processes and enhancing precision, LLM workflows appeared roughly five years ago, with NLP-based systems having been around for longer. Let’s see what a vanilla LLM workflow can look like:

The LLM can be operated via an API or through a chat interface such as ChatGPT, which you are surely familiar with. However, one can also go beyond the prompt-output logic by chaining steps onto each other. The result is a controllable workflow that repeats the same process for every human-computer interaction, delivering a one-size-fits-all approach. What could be the problem with that?
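To make the chaining idea concrete, here is a minimal sketch of such a fixed workflow. It rests on assumptions: `fake_llm` is a hypothetical stand-in for a real model call (no actual API is contacted), and the two steps, `summarize` and `translate`, are made up for illustration. The point is only that every input runs through the same steps in the same order:

```python
from typing import Callable, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM API call; it only echoes the prompt."""
    return f"[model output for: {prompt}]"


# A step takes the previous step's text and returns new text.
Step = Callable[[str], str]


def summarize(text: str) -> str:
    # Assumed example step: wrap the text in a summarization prompt.
    return fake_llm(f"Summarize: {text}")


def translate(text: str) -> str:
    # Assumed example step: wrap the text in a translation prompt.
    return fake_llm(f"Translate to German: {text}")


def run_workflow(user_input: str, steps: List[Step]) -> str:
    """Run every step in order; the sequence never changes with the input."""
    result = user_input
    for step in steps:
        result = step(result)
    return result


# The pipeline is defined once and reused for all interactions.
PIPELINE: List[Step] = [summarize, translate]

if __name__ == "__main__":
    print(run_workflow("Agents can pick their own tools.", PIPELINE))
```

Because `PIPELINE` is fixed, a request that needs neither a summary nor a translation still goes through both steps, which is exactly the one-size-fits-all limitation described above.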

• Some human-computer interactions are not foreseeable.

• Some machine-generated information is made up (hallucinations).

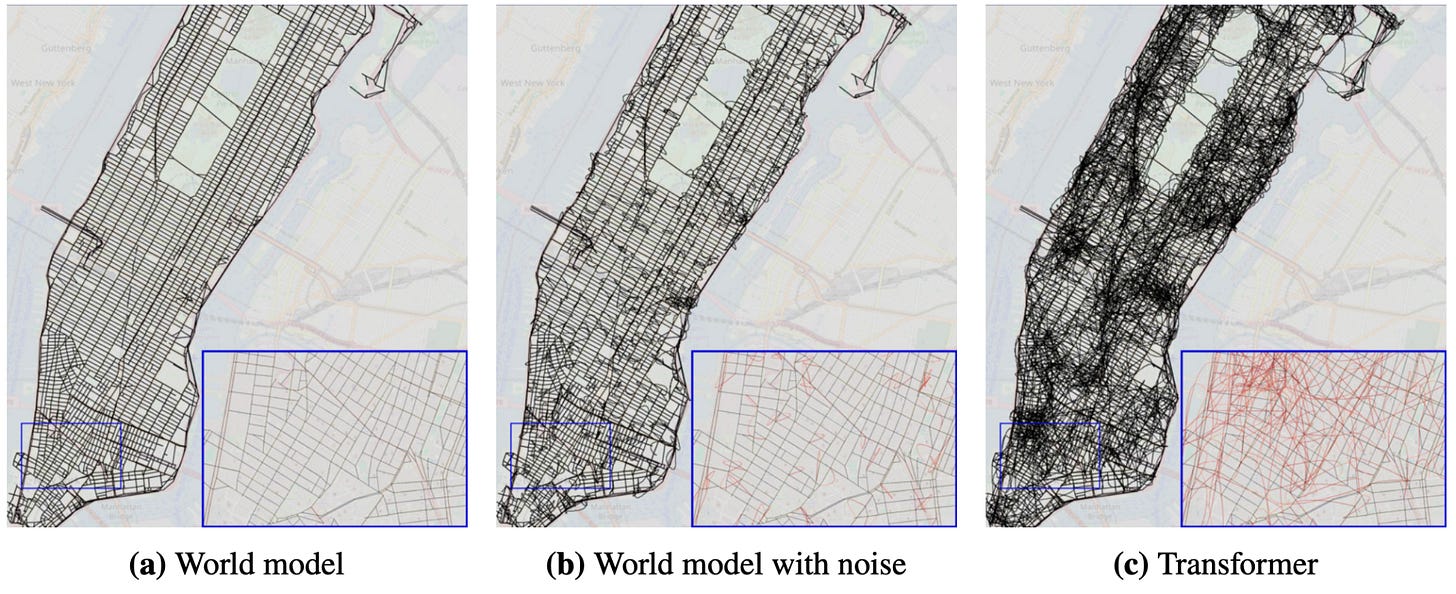

A recent paper by researchers from MIT, Harvard, and Cornell examines the capability of LLMs to generate maps based on human descriptions of the environment. One could say that the models failed the task successfully: “When tasked with providing turn-by-turn driving directions in New York City, for example, LLMs delivered them with near-100% accuracy. But the underlying maps used were full of non-existent streets and routes when the scientists extracted them.” (Vafa et al., 2024)

While one could assume that texts describing an environment are easily understood by fellow humans, a machine has no concept of what “the school is behind the church” should precisely mean. Incoherence not only creates fragility but also causes the model to map streets above buildings, through parks, and so on. One cannot just use any generative model to solve subtly different tasks, and LLMs are not a one-size-fits-all solution.

So, how did the LLM come up with these non-existent routes?

• LLMs generate an internal “World Model” to understand the context; the underlying rules within these worlds are hard to assess (e.g. flyovers).

• LLMs see a wider variety of possible steps, including “bad steps”.

• Errors, such as detours, cause the navigation models used by LLMs to fail.

AI Agents are not like other Tools

The most important aspect of an agent is its ability to make autonomous (or human-assisted) decisions. Based on the task description, an agent adapts:

• its workflow and iterations

• whether tools and capabilities (sensors, web search, RAG, advanced reasoning…) are used, and which ones

• which data sources are needed to solve the task

• its collaborations with other agents
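The decisions listed above can be sketched in a few lines. This is a toy illustration under stated assumptions, not how any particular framework implements agents: the tools `web_search` and `calculator` are hypothetical stubs, and `choose_tools` uses simple keyword rules as a stand-in for the decision that a real agent would delegate to the LLM.

```python
from typing import Callable, Dict, List


def web_search(task: str) -> str:
    """Hypothetical tool: pretends to search the web."""
    return f"search results for '{task}'"


def calculator(task: str) -> str:
    """Hypothetical tool: pretends to do arithmetic."""
    return f"calculation for '{task}'"


# Registry of the tools the agent may pick from.
TOOLS: Dict[str, Callable[[str], str]] = {
    "web_search": web_search,
    "calculator": calculator,
}


def choose_tools(task: str) -> List[str]:
    """Stand-in for the model's decision. A real agent would ask the LLM
    which tools to use; keyword rules keep this sketch runnable offline."""
    lowered = task.lower()
    chosen: List[str] = []
    if any(word in lowered for word in ("latest", "news", "find")):
        chosen.append("web_search")
    if any(word in lowered for word in ("calculate", "how many")):
        chosen.append("calculator")
    return chosen


def run_agent(task: str) -> List[str]:
    """Assemble the workflow per task instead of following a fixed sequence."""
    observations = [TOOLS[name](task) for name in choose_tools(task)]
    if not observations:
        # No tool was selected, so the agent answers directly.
        observations.append(f"answered '{task}' directly, no tools needed")
    return observations


if __name__ == "__main__":
    for task in ("Find the latest news on LangGraph", "Say hello"):
        print(task, "->", run_agent(task))
```

Unlike a fixed chain, the set of executed steps here depends on the task: one request triggers a search, another triggers no tool at all.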

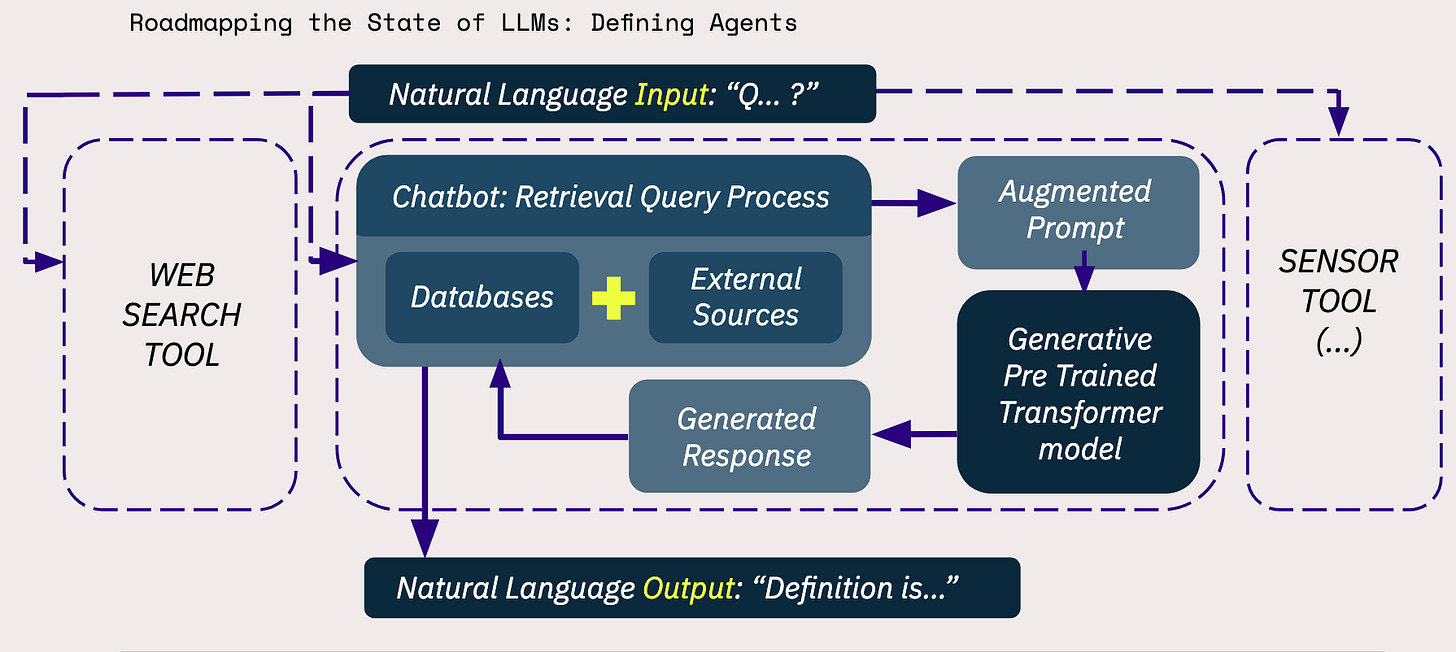

Agentic AI takes an entirely different route when confronted with a new task: it tries to execute the task with specialized tools. Let’s see what such a workflow could look like in an LLM-powered application:

Every step between input and output is optional, yet the application can still be operated through natural language, just like a vanilla LLM. To summarize the findings:

AI Workflow

• Process-Oriented: Focuses on the execution of a sequence of tasks (e.g., model training, evaluation, deployment).

• Deterministic: Follows predefined steps and rules, even if they involve adaptive models.

• Static or Semi-Dynamic: Once defined, workflows operate within a fixed framework, with parameters adjusted as needed.

• Reproducible: Produces consistent results when repeated with the same input.

• Scope: Addresses larger systems or projects (e.g., automating machine learning pipelines, as in MLOps).

AI Agent

• Autonomous: Acts independently, adapting to new inputs or environments.

• Dynamic: Continuously learns and reacts to changes, potentially updating its strategy.

• Goal-Oriented: Focuses on achieving specific objectives by interacting with users, systems, or other agents.

• Interactive: Engages with its environment, which could include humans, software systems, or physical surroundings.

• Adaptable: Uses techniques like reinforcement learning, natural language understanding, or multi-agent coordination.

The next article will cover the setup of LangGraph Studio to build an agentic AI workflow.